Introduction

For any financial or commercial establishment, a branch is a key touch point with its customers. In addition, the majority of an enterprise's workforce today works out of its various branch locations.

The traffic originating from enterprise and commercial branch locations has grown exponentially in the past few years, driven primarily by the increase in the number of network-connected devices at each branch location.

For example, in the year 2000 the number of network-connected devices per user averaged between 0 and 1, so a branch with 20 users had about 5 to 10 devices in total. In 2020, that number for a small branch is projected to be close to 100, as the number of devices per user has increased to 6.8.

The primary drivers for this change are:

- Number of network-connected devices per employee

- Number of network-connected endpoints in a branch (e.g. Wi-Fi endpoints, IoT endpoints, video/audio conferencing, CCTV)

- Add-on services offered to customers (e.g. guest Internet access, self-service kiosks, AR/VR services)

As a result, branch traffic is no longer just application/client traffic destined for the data center: these new services now make up more than 80% of the traffic leaving a branch location.

A typical enterprise branch network is implemented in a hub-and-spoke model, where all traffic is sent to the hub (data centre(s) or cloud services) where the core decision-making apps reside. Most enterprises implement this model because it is time-tested and works well by keeping decision-making capability in a central hub.

But as more add-on services are rolled out to the branch, this model introduces various issues, such as latency, very high bandwidth requirements, and government regulations under which certain data cannot leave the country. For example, new services such as biometric authentication and IoT endpoints bring new challenges: sending data across the network to a central hub for every decision degrades the customer experience, because response time is directly proportional to network latency.

Second, sending all traffic to the hub increases the bandwidth requirements on the MPLS links, which raises an enterprise's overall CapEx/OpEx.

With compute becoming much cheaper, and with Big Data, IoT and AI placing heavy demands on data, one way to solve this is to make the branch more intelligent, able to make decisions locally, and to rely on the hub only for very critical processing. This makes the branch an interesting use case for edge computing. In addition, by making the network more intelligent, traffic can be steered dynamically between branches depending on the available resources.

Architecture

To enable such an intelligent branch with dynamic workloads and agile branch network, we need three major components:

1. Kubernetes

2. SD-WAN

3. An Orchestrator

Both Kubernetes and SD-WAN are technologies which many enterprises are actively evaluating or already running in production. Hence, this use-case acts as a natural extension of these two well-known technologies.

Kubernetes consists of two types of nodes:

- Kubernetes master nodes, which act as the control and management plane for deploying containers

- Kubernetes worker nodes, which run the deployed container workloads

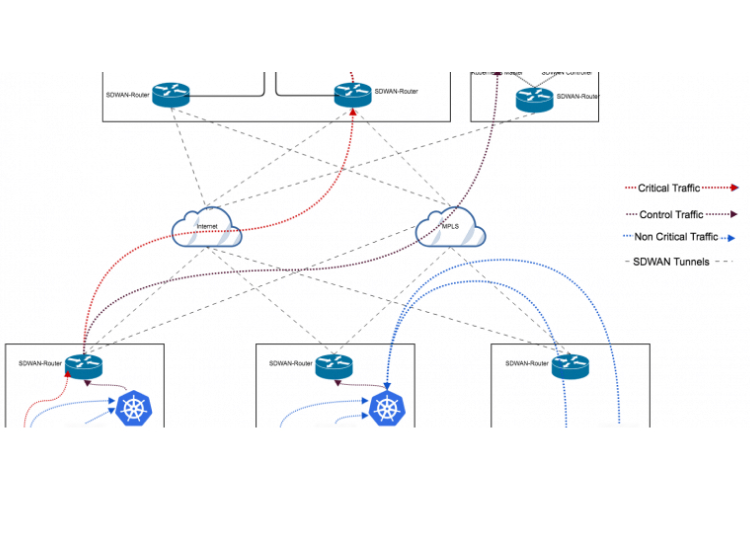

In this use case, Kubernetes master nodes are deployed in the hub sites and Kubernetes worker nodes are deployed on compute nodes in the branch sites.
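One way to make sure a workload actually lands on the worker nodes of a particular branch is a Kubernetes `nodeSelector`. The minimal sketch below builds an `apps/v1` Deployment manifest as a Python dict; the node label key `example.com/branch-site`, the site names and the image are hypothetical placeholders, assuming each branch worker node has been labelled with its site name beforehand.

```python
import json

# Hypothetical node label applied to branch worker nodes, e.g. with:
#   kubectl label node <node-name> example.com/branch-site=branch-042
BRANCH_LABEL = "example.com/branch-site"


def branch_deployment(app: str, image: str, site: str, replicas: int = 1) -> dict:
    """Build an apps/v1 Deployment that only schedules onto one branch's workers."""
    # Include the site in the pod labels so per-branch Deployments of the same
    # app do not have overlapping selectors.
    labels = {"app": app, "branch-site": site}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{app}-{site}", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # nodeSelector restricts scheduling to nodes carrying this
                    # label, i.e. the worker nodes located in the chosen branch.
                    "nodeSelector": {BRANCH_LABEL: site},
                    "containers": [{"name": app, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = branch_deployment("auth", "registry.example.com/auth:1.0", "branch-042")
    # kubectl accepts JSON manifests, e.g.: python this_script.py | kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```

Generating the manifest from code, rather than hand-editing YAML per branch, is what later lets an orchestrator place the same app in different branches programmatically.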

For SD-WAN, a controller and management layer is deployed in the hub site, with the data plane distributed across all branch and hub sites.

Both the Kubernetes master and the SD-WAN management layer expose northbound APIs. An orchestrator is required to connect the APIs of the Kubernetes master and the SD-WAN management layer.

The orchestrator is enterprise-specific code developed in-house.

The interconnection between Kubernetes and SD-WAN enables an enterprise operations team to dynamically deploy container workloads at branch locations and to actively enable the right SD-WAN policies to control and steer traffic based on the workloads deployed.
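The core of such an orchestrator can be framed as a reconciliation step: compare the workloads Kubernetes reports as running with the steering policies currently configured on SD-WAN, and compute what to create and delete. The sketch below assumes simplified, hypothetical `Workload` and `SteeringPolicy` types; a real orchestrator would populate them from the Kubernetes API and push the resulting changes through the SD-WAN vendor's own API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """A container workload as reported by the Kubernetes master (simplified)."""
    app: str
    site: str


@dataclass(frozen=True)
class SteeringPolicy:
    """Hypothetical SD-WAN policy: steer an app's traffic towards a site."""
    app: str
    target_site: str


def desired_policies(workloads: list[Workload]) -> set[SteeringPolicy]:
    # One steering policy per (app, branch site) that currently runs the app.
    return {SteeringPolicy(w.app, w.site) for w in workloads}


def reconcile(workloads: list[Workload], current: set[SteeringPolicy]):
    """Return (to_create, to_delete) so SD-WAN policies match deployed workloads."""
    desired = desired_policies(workloads)
    to_create = desired - current   # apps deployed but not yet steered to
    to_delete = current - desired   # stale policies for removed workloads
    return to_create, to_delete


if __name__ == "__main__":
    workloads = [Workload("auth", "branch-042"), Workload("kiosk", "branch-007")]
    current = {SteeringPolicy("auth", "branch-042"), SteeringPolicy("auth", "branch-013")}
    create, delete = reconcile(workloads, current)
    print("create:", create)
    print("delete:", delete)
```

Running the reconciliation periodically, or whenever Kubernetes reports a deployment change, keeps SD-WAN policies in step with where workloads actually run, rather than relying on one-off manual changes.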

For example, if an authentication app is deployed in a branch, authentication requests originating from remote or small branches close to that branch can be steered towards it rather than to the hub site. This change can be made by enabling dynamic policies on SD-WAN.
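The steering decision in the authentication example can be sketched as: serve locally if the app runs in the requesting branch, otherwise pick the lowest-latency branch that hosts it, and fall back to the hub when no such branch is close enough. The latency table and the 20 ms threshold below are hypothetical; in practice an SD-WAN fabric measures path latency itself, and the decision would be expressed as a policy rather than computed per request.

```python
HUB = "hub"


def steering_target(source: str, app_sites: set[str],
                    latency_ms: dict[tuple[str, str], float],
                    max_latency_ms: float = 20.0) -> str:
    """Pick where a branch should send requests for an app.

    Returns the source itself if it hosts the app; otherwise the
    lowest-latency hosting site within max_latency_ms; otherwise the hub.
    """
    if source in app_sites:
        return source  # the app runs locally, so keep traffic in the branch
    candidates = [
        (latency_ms[(source, site)], site)
        for site in app_sites
        if (source, site) in latency_ms and latency_ms[(source, site)] <= max_latency_ms
    ]
    # min() on (latency, site) tuples picks the nearest site; ties break by name.
    return min(candidates)[1] if candidates else HUB


if __name__ == "__main__":
    latency = {("branch-013", "branch-042"): 8.0, ("branch-013", "branch-007"): 15.0}
    print(steering_target("branch-013", {"branch-042", "branch-007"}, latency))
```

Falling back to the hub keeps the original hub-and-spoke behaviour as the safe default, so a branch is never steered towards a distant edge site that would perform worse than the hub.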

Considerations

To enable an intelligent branch with edge computing, the following should be considered:

Microservices and Containerization:

Before deploying Kubernetes and rolling out apps, the first step is to assess the applications deployed in the enterprise to understand which of them could be refactored into microservices and packaged as containers. This assessment is essential for understanding the current state of the enterprise's applications.

Kubernetes Deployment Architecture:

In most common deployments, both Kubernetes master and worker nodes are located in the hub site. When worker nodes are deployed in branch sites instead, the latency between master and workers, the management of compute resources, and security all need to be considered.
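One concrete consequence of master-to-worker latency is what happens when a branch worker temporarily loses its WAN link to the master. Kubernetes by default adds 300-second `NoExecute` tolerations for the `node.kubernetes.io/unreachable` and `node.kubernetes.io/not-ready` taints, after which the control plane evicts the pods. The sketch below builds longer tolerations for branch workloads; the one-hour value is an illustrative assumption, not a recommendation, and should be chosen against the enterprise's own WAN outage profile.

```python
def branch_pod_tolerations(wan_outage_tolerance_s: int = 3600) -> list[dict]:
    """Tolerations that delay eviction of pods on a branch worker that loses
    contact with the master, e.g. during a WAN outage.

    The default of 3600 seconds is an assumption for illustration only.
    """
    return [
        {
            "key": key,
            "operator": "Exists",
            "effect": "NoExecute",
            "tolerationSeconds": wan_outage_tolerance_s,
        }
        for key in ("node.kubernetes.io/unreachable", "node.kubernetes.io/not-ready")
    ]


if __name__ == "__main__":
    # Merge into a pod template spec before applying the manifest.
    pod_spec = {"containers": [{"name": "auth", "image": "registry.example.com/auth:1.0"}]}
    pod_spec["tolerations"] = branch_pod_tolerations()
    print(pod_spec)
```

Tuning these tolerations is only one part of the latency consideration; the same WAN link also carries kubelet heartbeats and image pulls, which is why the placement of masters and workers deserves deliberate design.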

Identifying Branch Sites:

Not every branch needs to host its own Kubernetes workers. Depending on the type of application deployed on the Kubernetes cluster, a single branch site in a geographic area can be chosen to host a Kubernetes worker, and SD-WAN policies can steer traffic from nearby branches to this site.
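Choosing one host branch per geographic area can be sketched as a simple per-region selection. The `Branch` type, its `spare_cpu_cores` capacity metric and the `min_cores` threshold below are hypothetical stand-ins for whatever criteria an enterprise actually uses (user count, rack space, link capacity, and so on).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Branch:
    name: str
    region: str
    spare_cpu_cores: int  # hypothetical capacity metric


def pick_edge_sites(branches: list[Branch], min_cores: int = 4) -> dict[str, str]:
    """Choose at most one branch per region to host Kubernetes workers.

    Within each region, the branch with the most spare CPU is chosen if it
    meets min_cores; regions without a suitable branch keep using the hub.
    """
    by_region: dict[str, list[Branch]] = defaultdict(list)
    for b in branches:
        by_region[b.region].append(b)
    chosen: dict[str, str] = {}
    for region, members in by_region.items():
        # Tie-break on name so the result is deterministic.
        best = max(members, key=lambda b: (b.spare_cpu_cores, b.name))
        if best.spare_cpu_cores >= min_cores:
            chosen[region] = best.name
    return chosen


if __name__ == "__main__":
    branches = [Branch("b1", "north", 8), Branch("b2", "north", 16), Branch("b3", "south", 2)]
    print(pick_edge_sites(branches))
```

The remaining branches in each region would then be steered towards the chosen site by SD-WAN policy rather than hosting workers themselves.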

Workforce Skills Transformation:

Since this use case connects SD-WAN with Kubernetes, which are currently managed by two different teams, both teams should be trained in both technologies. It therefore becomes imperative for network engineers to understand Kubernetes and for systems engineers to understand SD-WAN.

Orchestrator:

Currently there is no vendor-provided software to integrate SD-WAN with Kubernetes. Since most SD-WAN vendors provide an API-rich management layer, a simple orchestrator can be built in-house that maps applications deployed on Kubernetes to SD-WAN policies, making it easier for the operations team to manage deployments from a single pane of glass.
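Because SD-WAN management APIs differ by vendor, the orchestrator's outbound side is best kept as a thin adapter. The sketch below builds, without sending, an HTTP request that asks an SD-WAN manager to steer an app's traffic; the endpoint URL, JSON payload fields and bearer-token header are all hypothetical placeholders that would be replaced by the chosen vendor's documented API.

```python
import json
import urllib.request

# Hypothetical SD-WAN manager endpoint; every vendor's API differs, so the
# path, payload fields and auth header below are placeholders.
SDWAN_API = "https://sdwan-manager.example.com/api/v1/policies"


def policy_request(app: str, target_site: str, source_sites: list[str],
                   token: str) -> urllib.request.Request:
    """Build (but do not send) a request asking the SD-WAN manager to steer
    an app's traffic from source_sites towards target_site."""
    payload = {
        "name": f"steer-{app}-to-{target_site}",
        "match": {"application": app, "sources": sorted(source_sites)},
        "action": {"steer_to": target_site},
    }
    return urllib.request.Request(
        SDWAN_API,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {token}"},
        method="POST",
    )


if __name__ == "__main__":
    req = policy_request("auth", "branch-042", ["branch-013", "branch-007"], "example-token")
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
    # Against a real manager, the request would be sent with:
    #   with urllib.request.urlopen(req, timeout=10) as resp: ...
```

Keeping request construction separate from sending makes the vendor-specific mapping easy to test offline and to swap out if the enterprise changes SD-WAN vendors.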

PoC and Testing:

Before moving an application to this deployment model, a proper PoC needs to be performed. Since both Kubernetes and SD-WAN operate in a virtual layer, a virtual PoC can be performed by emulating the current enterprise network along with Kubernetes and the application in question. Once all the teams concerned are confident that the app works in this distributed setup, it can be deployed in a couple of branch sites to test its performance and then rolled out to other sites.
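The staged rollout described above, a couple of pilot branches first and then the remaining sites, can be sketched as splitting sites into waves and stopping at the first wave that fails its checks. The `deploy` and `healthy` callbacks are hypothetical hooks standing in for the orchestrator's deployment step and whatever performance tests the teams agree on; wave sizes are illustrative.

```python
from typing import Callable


def rollout_waves(sites: list[str], pilot_size: int = 2, wave_size: int = 10) -> list[list[str]]:
    """Split branch sites into a small pilot wave followed by fixed-size waves."""
    if pilot_size < 1 or wave_size < 1:
        raise ValueError("pilot_size and wave_size must be positive")
    waves = [sites[:pilot_size]] if sites else []
    rest = sites[pilot_size:]
    waves += [rest[i:i + wave_size] for i in range(0, len(rest), wave_size)]
    return waves


def run_rollout(waves: list[list[str]],
                deploy: Callable[[str], None],
                healthy: Callable[[str], bool]) -> list[str]:
    """Deploy wave by wave; stop after the first wave with an unhealthy site.

    Returns the sites that were deployed to.
    """
    done: list[str] = []
    for wave in waves:
        for site in wave:
            deploy(site)
            done.append(site)
        if not all(healthy(site) for site in wave):
            break  # hold the rollout so the teams can investigate
    return done


if __name__ == "__main__":
    sites = [f"branch-{i:03d}" for i in range(7)]
    print(rollout_waves(sites, pilot_size=2, wave_size=3))
```

Gating each wave on the previous one mirrors the PoC approach: confidence gained in a few branches is required before the change reaches the rest of the estate.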

Summary

For enterprises today, Kubernetes and SD-WAN are technologies that are already on the roadmap, in evaluation, or in production. Enabling an intelligent branch extends both SD-WAN and Kubernetes with enterprise-specific glue logic. This edge computing use case enables enterprises to roll out next-generation services such as Big Data, IoT, video and mobility quickly, while reducing traffic between branch and hub sites. Enterprises can also use it to scale branches quickly and move applications and policies seamlessly, without added operational overhead.